Week 9

This week was spent correcting mistakes and applying the suggestions proposed by Dr. Ejaz. The machine learning material was moved to Chapter 2, Chapter 3 was given a more detailed explanation, and Chapter 4 was updated and expanded. Most of the time was spent collaborating to update the proposal and the website.

Week 8

Few edits and updates were made to the site this week. Like last week, nearly all effort went into completing the project proposal in time for review and feedback. Chapters 2, 3 and 4 were completed and proofread.

Week 7

This week, minor edits were made to the website based on last week's criticisms. Most of our time was spent working on the proposal report, which was started in Week 6. So far, Chapter 1 is mostly finished (although it may still change) and Chapters 2 and 3 are in progress. Note that there was no meeting this week.

Week 6

Updates this week included redesigning the home page of the website, adjusting part of the project rubric, updating the budget, and working out the scope and components of the project, with one glove as the bare minimum.

Engineering Specifications

Updates to this section included finishing the justification and verification portions of the table, which explain what will be tested for each component and how, in order to validate the design. The table was inserted as a screenshot rather than through a table viewer because of unforeseen formatting issues on the website.

Project Rubric & Budget

Last week, the issue with our rubric was that part of it was not descriptive or technical enough to serve as a basis for the project's success criteria. This week, adjustments were made to better describe the range for each section in the functionality row. Our target is a device that translates letters/gestures within 1-2 seconds with at least an 85% accuracy rate. The budget table was also updated to reflect the components being used, along with their prices and links to their respective websites.

Components and scope of the project

Last week it was decided that we would focus on one glove that can handle letters/numbers as well as gestures that require only one hand. In nearly all of the sections described above, we acknowledged the potential for a second glove if things go in our favor.

One Glove

Sensor Module

The first item is the pair of copper compression gloves, which is the foundation for everything to be built in this project. We noticed that the gloves contain copper and wondered whether we could use that to our advantage to get more accurate sensor readings for letters such as "R", "U" and "V". The flex sensors will all connect to the microcontroller, using 5 of its 8 analog pins. It is unclear as of now how a second glove will connect. The proposed microcontroller has a built-in 9-axis inertial measurement unit (IMU) that includes an accelerometer and a gyroscope, so there is no need for an extra component with one glove.

Power Module

The device operates at 3.3 V, which keeps power consumption low. In this case, there will be no need for a voltage converter, since the motion sensing that an MPU-6050 would provide is already built into the proposed microcontroller board.

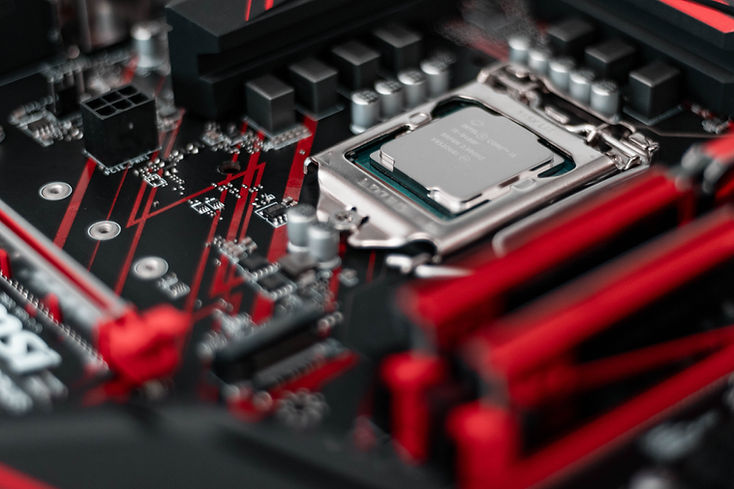

Control Module

The microcontroller being used is an Arduino Nano 33 BLE Sense, which has many functions as listed above and also supports Bluetooth, acting as either a client or a host. Our requirements for the microcontroller were that it be small, have an I2C and/or SPI interface, have enough I/O pins for the design, run at a high clock speed, and have enough RAM for machine learning.

One of the biggest concerns was whether machine learning could be performed on this board. Luckily, there is an experimental port called TensorFlow Lite for Microcontrollers (TinyML) that is compatible with this device. The port has also been demonstrated with gesture classification algorithms. The process to implement this is to create a TensorFlow model, convert the model to a TensorFlow Lite FlatBuffer, convert the FlatBuffer to a C byte array, integrate it with the TensorFlow Lite for Microcontrollers C++ library, and deploy it to the device. Some of the limitations are that only a limited subset of operations is supported and that training is not supported. We are not yet sure what "training is not supported" means. If classification can be done on the device, could that mean the model is trained beforehand and then formatted and compiled into the microcontroller? Of course, if this hinders development of our project, we may have to explore other microcontrollers or even other options.
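
As a rough illustration of the "FlatBuffer to C byte array" step only, the sketch below renders raw bytes as C source in Python. It is a minimal sketch, not the project's actual tooling: the function name `bytes_to_c_array`, the array name `g_model`, and the eight placeholder bytes are all assumptions made for this example, and a real run would read the bytes from the converted model file instead.

```python
def bytes_to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render raw bytes as a C array definition plus a length constant."""
    lines = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        # Each byte becomes a two-digit hex literal such as 0x1c.
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


# Placeholder bytes standing in for a real model file (assumption for the demo).
placeholder_model = b"\x1c\x00\x00\x00TFL3"
c_source = bytes_to_c_array(placeholder_model, name="g_model")
print(c_source)
```

The resulting text could be saved as a `.cc` or `.h` file and compiled into the microcontroller sketch alongside the C++ library.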

Two Gloves

Bluetooth Module

This module will only be used if a second glove is added. An MPU-6050 would be attached to the second glove, and its flex sensors would be connected through a Bluetooth transmitter. Another option is to use a second microcontroller (Arduino Nano 33 BLE Sense) that connects to the host microcontroller on the left hand. Since the microcontroller has built-in Bluetooth, the overall project becomes more mobile.

Week 5

Goals

This week was spent defining the goals and scope of the sign-language design in terms of engineering specifications, success criteria, and an updated block diagram.

The main objective of this senior design is split into 2 modes:

ASL Fingerspelling

This mode takes American Sign Language fingerspelling inputs from the flex sensors, converts the voltage data into letters through machine learning, and then forwards the result to a text-to-speech converter to produce audible sound. For example, when a user fingerspells a word, each recognized letter is held in a data buffer. When the user stops signing, the buffered data is processed into a predicted word. This word is sent through RAM to the text-to-speech module, where it is pronounced through the speaker. The mode is meant to demonstrate the device's capability of differentiating the simple yet similar gestures of the American Sign Language alphabet. Expressing acronyms is not expected to be possible at this time.
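
The buffering idea described above can be sketched in a few lines of Python. This is only a sketch under our own assumptions: the class name `LetterBuffer`, the 1.5-second pause threshold, and the use of timestamps to detect that the user has stopped signing are not taken from the proposal, and the "predicted word" here is simply the buffered letters joined together.

```python
class LetterBuffer:
    """Collects recognized letters until the signer pauses, then emits a word."""

    def __init__(self, pause_seconds: float = 1.5):
        self.pause_seconds = pause_seconds  # assumed pause length, not a project value
        self.letters = []
        self.last_time = None

    def add(self, letter: str, timestamp: float):
        """Store a letter; return the previous word if a pause came before it."""
        word = self.flush_if_paused(timestamp)
        self.letters.append(letter)
        self.last_time = timestamp
        return word

    def flush_if_paused(self, now: float):
        """Return the buffered word once the signer has been idle long enough."""
        idle_long_enough = (
            self.letters
            and self.last_time is not None
            and now - self.last_time >= self.pause_seconds
        )
        if idle_long_enough:
            word = "".join(self.letters)
            self.letters = []
            return word
        return None


buffer = LetterBuffer(pause_seconds=1.5)
for timestamp, letter in [(0.0, "H"), (0.6, "I")]:
    buffer.add(letter, timestamp)
word = buffer.flush_if_paused(3.0)  # signer has been idle for 2.4 seconds
print(word)
```

In the real device the finished word would be handed to the text-to-speech module rather than printed.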

ASL Gesture Signs

This slightly more complex mode would be a quicker way of producing words from sign language, using gestures rather than fingerspelling. Unlike ASL fingerspelling, this mode would recognize words and phrases from commonly known American Sign Language gestures. Because the hands move more dynamically, multiple data points need to be gathered for each gesture, along with many more samples. For demonstration purposes, about 20-30 of the most commonly used phrases will be sampled for machine learning. This end goal is meant to demonstrate what the gloves are capable of.

Success Criteria

A success criteria table was created and placed under the Engineering Specifications tab. The table is split into 4 categories: Comfort, Functionality, Mobility, and Appearance.

Comfort

Comfort pertains to how the glove feels on the user's hand. The type of material used and the placement of the components will affect this criterion. An excellent glove would be made of a comfortable material with components placed so that they do not compromise hand movement. A bad glove would be made of a cheap, uncomfortable material with loose component placement that hinders gesture recognition.

Functionality

Functionality focuses on the speed and accuracy of the glove(s). This pertains to the main success criteria of the proposal. Excellent functionality means quick response times and accurate predictions with a 95% success rate, with both modes working and correctly recognizing letters and gestures. Good functionality has similar qualities to a slightly lesser extent: fingerspelling works well, whereas ASL phrase gestures are guessed correctly 70% of the time. An Okay glove would have slow gesture response times, with ASL fingerspelling working correctly but ASL gesture recognition working only 50% of the time. A Bad glove would barely be functional, with neither mode accurately predicting letters, words or phrases.

Mobility

Mobility revolves around the freedom to use the gloves while connected to the control module. Excellent mobility consists of wireless communication with the microcontroller via Bluetooth, allowing the freedom to make gestures, with components tightly secured to the glove. Bad mobility means stationary use of the gloves, such as being dependent on a PC, which results in no mobility.

Appearance

This category rates how the glove looks. A glove with excellent appearance would have components concealed or presented in a way that looks like a retail unit. A glove with bad appearance would be loosely constructed with exposed components.

Updated Block Diagram

The block diagram was updated to include a more representative flowchart that correctly shows data flow, along with a brief description of each node. The biggest change was the addition of a Bluetooth Transfer Module. It was decided that a second glove should be added to better represent American Sign Language gestures as well as fingerspelling. Gestures made would be transmitted wirelessly from the Bluetooth transmitter to a receiver connected to the microcontroller. The addition of a second glove will depend solely on the success of single-glove fingerspelling.

Week 4

Sign Language Machine Learning

Many hours were spent this week researching and writing code for a sign-language glove machine learning demonstration, in which the model is trained on a dataset covering the letters A-Z. The model is made to predict which output label (A-Z) corresponds to a given input. The text-to-speech section of the code will have to be implemented later, as most of this week was spent learning and creating this demonstration code.

The code was written in Google Colab, a cloud notebook that lets you write and execute Python in your browser. Colab includes many libraries, needs zero configuration, can run TensorFlow and is easily shared among group members.

The first step was to create datasets for training and testing. The training data, with 400 samples per letter, and the testing data, with 100 samples per letter, were created in Excel and converted into CSV (comma-separated values) files for use in the code.
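
Our datasets were built by hand in Excel, but the same layout could also be generated programmatically. The sketch below is one possible way to do that in Python and is not the method we actually used: the five column names (`flex1` to `flex5`), the value ranges, and the per-letter pattern are all made up for illustration.

```python
import csv
import io
import random
import string

FEATURES = ["flex1", "flex2", "flex3", "flex4", "flex5"]  # hypothetical column names


def make_rows(samples_per_letter, rng):
    """Build rows of fake sensor values followed by the letter label."""
    rows = []
    for index, letter in enumerate(string.ascii_uppercase):
        for _ in range(samples_per_letter):
            # Give each letter its own base value plus a little noise (invented pattern).
            values = [round(index * 10 + rng.uniform(0, 5), 2) for _ in FEATURES]
            rows.append(values + [letter])
    return rows


def to_csv_text(rows):
    """Serialize the rows to CSV text with a header line."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(FEATURES + ["label"])
    writer.writerows(rows)
    return out.getvalue()


rng = random.Random(0)  # fixed seed so the fake data is repeatable
train_rows = make_rows(400, rng)
test_rows = make_rows(100, rng)
train_csv = to_csv_text(train_rows)
print(len(train_rows), len(test_rows))
```

Writing `train_csv` to a file would give a CSV that can be uploaded to Colab in the same way as the Excel export.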

The libraries were imported and the files were uploaded to the program, where they had to be formatted and arranged so that the model could read them. The labels (letters) were popped off the dataset so that the data could be paired against them once training was executed.
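
To show what "popping" the labels means, here is a small standard-library sketch. It is not the notebook code itself: the tiny inline dataset, the column names, and the helper name `split_features_and_labels` are assumptions for illustration only.

```python
import csv
import io

# Tiny stand-in for an uploaded CSV file (invented values and column names).
csv_text = "flex1,flex2,label\n1.0,2.0,A\n3.0,4.0,B\n"


def split_features_and_labels(text, label_column="label"):
    """Separate each row into numeric features and its letter label."""
    features, labels = [], []
    for row in csv.DictReader(io.StringIO(text)):
        labels.append(row.pop(label_column))  # remove the label from the row
        features.append({name: float(value) for name, value in row.items()})
    return features, labels


features, labels = split_features_and_labels(csv_text)
print(features)
print(labels)
```

After the split, `features[i]` and `labels[i]` still line up by position, which is what lets the model pair each input with its correct letter during training.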

-

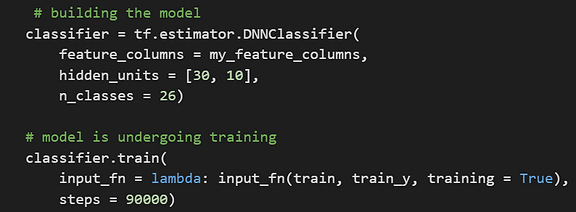

Next, the DNNClassifier model is used, a "deep neural network" TensorFlow Python API that predicts discrete labels as classes. The model builds a feedforward multilayer neural network that is trained with a set of labeled data in order to perform classification on similar, unlabeled data. The model specifications written were from sources; knowledge on this is still rudimentary so the model created was to make sure the code just "works".

-

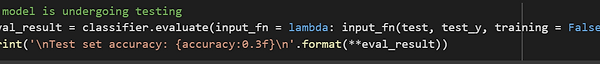

When testing the model varied a lot, ranging from terrible to good accuracy. Note that the input data chosen were written with a slight pattern so that the model could pick up on those subtle details. The losses for this trained trial was at 1.12 which is bad since the goal is to get it under 0.5.

Prediction code was written in which the user enters input data and the output is a prediction along with its confidence percentage. Of course, when the actual project is underway, the optimized code will receive data from the serial bus on the glove. The code written here merely shows that it can be done. More research is needed to optimize and improve this project.
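
The "prediction plus percentage" output can be illustrated without any machine learning library. The sketch below assumes the classifier produces one raw score per letter and turns those scores into probabilities with a softmax; the function name `predict_letter` and the example scores are made up, and this is not the demonstration code from the notebook.

```python
import math
import string


def predict_letter(scores):
    """Turn 26 raw scores into (letter, confidence percent) using a softmax."""
    peak = max(scores)
    # Subtracting the peak keeps the exponentials numerically stable.
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    probabilities = [value / total for value in exps]
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return string.ascii_uppercase[best], 100.0 * probabilities[best]


scores = [0.0] * 26
scores[2] = 3.0  # pretend the model favors the third letter
letter, confidence = predict_letter(scores)
print(f"{letter}: {confidence:.1f}%")
```

A low percentage here would be a signal that the letter should be rejected or re-sampled rather than spoken.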

Week 3

This week was spent doing deep research on the final two proposal ideas - Controlled Reentry Weather Balloon and Sign Language Gloves.

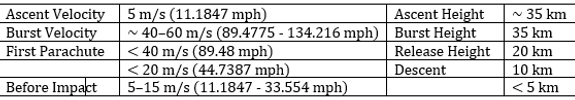

Controlled Reentry Weather Balloon

For the controlled descent proposal, the necessary limitations and average measurements were researched and recorded. For example, the table below shows the average velocity of payload descent at different altitudes.

Two techniques that allow high-quality measurements during ascent and descent at similar, controlled speeds were researched. The single balloon technique uses an automatic valve system to release helium from the balloon at a preset (ambient) pressure. The double balloon technique adds a parachute balloon to control the descent of the instruments after the carrier balloon (used to lift the payload) is released at a preset altitude. The main reasons for controlled ballooning are to prevent pendulum motion during ascent and to measure clean, unperturbed air at similar ascent and descent speeds, which yields two vertical profiles. A disadvantage of controlled descent ballooning is that payloads could travel more than twice the distance from the launch site compared to burst flights.

The equation for parachute impact speed will be used to solve for the descent velocity at which the drag force equals the payload weight. At that velocity the forces balance, so the parachute no longer accelerates. To stay under the thresholds in FAA Part 101.1(a)(4)(ii) and (iii), a single payload package should weigh no more than 6 pounds, and multiple payload packages no more than 12 pounds in total.

W = D = (1/2) * rho * V^2 * S * CD

where:

- W = payload (+ parachute) weight
- D = drag force
- rho = atmospheric density
- V = velocity
- S = drag area (for a parachute, circular area = Pi*radius^2)
- CD = drag coefficient

The equation can then be solved for the radius (and diameter) of the parachute that gives a desired landing speed.
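
As a worked example, setting weight equal to drag, W = (1/2) * rho * V^2 * S * CD with S = Pi*radius^2, gives radius = sqrt(2*W / (rho * V^2 * CD * Pi)). The Python sketch below evaluates that expression. The drag coefficient of 1.5, the sea-level air density of 1.225 kg/m^3, and the 5 m/s landing speed are assumed example values, not figures from our research.

```python
import math


def parachute_radius(weight_n, landing_speed_ms, drag_coefficient=1.5, air_density=1.225):
    """Radius in meters of a circular parachute whose drag equals the weight."""
    # From W = 0.5 * rho * V^2 * S * CD, solve for the drag area S.
    area = 2.0 * weight_n / (air_density * landing_speed_ms ** 2 * drag_coefficient)
    # S = pi * r^2 for a circular canopy.
    return math.sqrt(area / math.pi)


weight = 6 * 4.448  # a 6 lb payload expressed in newtons (1 lbf is about 4.448 N)
radius = parachute_radius(weight, landing_speed_ms=5.0)
print(f"radius = {radius:.2f} m, diameter = {2 * radius:.2f} m")
```

A slower landing speed or a lower drag coefficient both push the required radius up, since the radius scales with 1/V and with 1/sqrt(CD).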

The use of a parafoil was looked into, as the military has attempted controlled descent this way, but issues included a tendency for the system to develop a flat spin that proved unrecoverable, and the parafoil failing to inflate because of low air density and easily tangled lines. Lastly, the cross range provided by grid fins was researched; they were found to act like trim surfaces, with the payload acting as the steering surface. On the Falcon 9 reentry stage, grid fins act as the critical control surface during some of the earlier phases of reentry. Grid fin effectiveness is a function of the velocity of the airstream. The effectiveness of grid fins as a control surface is broken down by stage below:

- Out-of-the-atmosphere: useless, as insufficient atmospheric mass for interaction.
- Atmospheric entry: very beginning of "thicker" atmosphere. Grid fins limited by remarkably thin upper atmosphere.
- Encountering denser atmosphere: stage surfaces begin to heat excessively, sufficient to cause damage if unaddressed. Grid fins are unneeded in this phase.
- High-altitude, high-velocity supersonic descent: grid fins operate well in the supersonic flight regime and are very useful here, as well as being the only method the rocket has for control authority at this point.
- Transonic flight: the rocket transitions from supersonic to subsonic velocity (due to atmospheric drag continually slowing the descending stage), encountering a great deal of "transonic buffet" as part of the rocket's surfaces are in supersonic flow while other parts are in subsonic flow. Grid fins are generally quite ineffective in the transonic regime.
- Subsonic descent: once the stage is below ~Mach 0.8 - 0.9, grid fins are once again very useful and are the only control surfaces available to help "point" the rocket. The rocket continues to decelerate initially due to atmospheric drag, up until the force of gravity on the descending stage equals the force of drag on the stage; at this point, the stage reaches what is called "terminal velocity." The grid fins are useful during all of this.

Sign Language Glove

There is no universal sign language; sign languages come in different forms. The one being used for this project is ASL (American Sign Language). From research, I divided ASL into 2 forms: ASL signs and ASL fingerspelling. ASL signs are expressions that convey a word or concept, while in ASL fingerspelling each letter of the English alphabet is represented by a gesture. For the project, developing ASL gloves based on fingerspelling would prove to be simpler. We would only need to worry about the 26 letter gestures and the digits (0-9).

Machine learning is also imperative to this project because gestures differ from user to user; one person's "A" may be different from another person's "A". There has to be a process where the script learns what each gesture looks like and predicts the gesture the user is signing with little to no error. I was able to find an open-source library called TensorFlow; it simplifies machine learning and has an API (Application Programming Interface) that can be used for a variety of applications. The type of machine learning model needed for this project is a support vector machine (SVM)/support vector classifier (SVC) that assigns each gesture (feature) to a letter (label). SVMs use classification algorithms that normally separate 2 groups but can be extended to multi-class problems.

The machine learning logic would work like this: we would take flex sensor and MPU-6050 data and filter/format it through averaging and variance (subject to change). For each letter/label, we would take 500 samples, which should be enough for the model to train on. Assuming we collect data for 26 letters and 10 digits, there would be 18000 samples. After data collection is complete, 80% of the data will be used for training while 20% will be used for testing. Perhaps there could be an option for new glove users to input their own gesture data, although they would enter each gesture several times rather than 500 times. The process should take roughly 30 minutes.
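
The sample counts and the 80/20 split can be checked with a short Python sketch. The sample records here are placeholders (a label and an index), and the helper name `train_test_split` and the fixed shuffle seed are our own choices for the example rather than part of the planned pipeline.

```python
import random
import string

LABELS = list(string.ascii_uppercase) + [str(d) for d in range(10)]  # 26 letters + 10 digits
SAMPLES_PER_LABEL = 500


def train_test_split(samples, train_fraction=0.8, seed=0):
    """Shuffle a copy of the samples and cut it into training and testing parts."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Placeholder records: (label, sample index) instead of real sensor readings.
samples = [(label, i) for label in LABELS for i in range(SAMPLES_PER_LABEL)]
train, test = train_test_split(samples)
print(len(samples), len(train), len(test))
```

Shuffling before the cut matters because the samples are collected letter by letter; without it, the testing set would contain only the last few labels.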

The models found online were similar, except that their datasets came from image processing whereas our data is based on flex sensor and MPU-6050 readings. The only task that would take time is adjusting the code to take in our datasets through filtering and data allocation.

Week 2

After sharing our ideas with Dr. Ejaz, our proposal ideas were reduced from 7 to 4 (sign language gloves for the deaf, echolocation gloves for the blind, reentry weather balloon, and space flight control station). Etin-osa handled research for the sign-language gloves and the space flight simulator, while Joseph handled the reentry weather balloon and the echolocation gloves for the blind. Deeper research was performed on these 4 topics: block diagrams were created, parts were researched, and an overall understanding of each topic was reached. The Wix website was updated with progress and findings.

Week 1

The Wix website was created and partially filled in. Several ideas were brainstormed and researched for further understanding. These included a sign-language glove translator, a robotic tracker and fire extinguisher, a windshield with turn-by-turn instructions, a low-cost navigation HUD for vehicles, a controlled reentry weather balloon, a space flight control station, ultrasonic echolocation gloves, and a car seat monitor.