December 2, 2020 (Week 15)

This week, the presentation and videos for SignGlove were completed. The first part of the presentation drew on sections from the proposal; the later parts drew on the design report, including hardware implementation, software implementation, troubleshooting, success criteria results, non-technical aspects, and future improvements.

The link to the presentation is below:

https://etinosaotokiti.wixsite.com/seniordesign/copy-of-presentation

Videos were also recorded of SignGlove that demonstrated both of its functionalities. The clips were combined into one video.

November 25, 2020 (Week 14)

This week, much time was spent improving the model's accuracy, troubleshooting the Arduino, performing the success criteria tests, and wrapping up the report to account for all of this.

Combined Gesture Model

The combined model, with all 26 letter classes, a RELAXED class, and 20 phrases, was completed and tested for accuracy and verification. A final confusion matrix was made, along with graphs of overfitting, underfitting, and loss over time, for analysis and interpretation in the report. The pictures below show the matrix and graphs.

Regarding the confusion matrix, the image could not be zoomed in enough to show the actual numbers for each predicted label without omitting some of the labels on the graph. Note that the numbers along the diagonal are in scientific notation: 1.2e2 means 120 samples, which is the maximum number of predictions the model can get right. Looking at the matrix itself, D, Sure, and Will had low or no numbers, suggesting that the model could not interpret them as well. However, this wasn't the case when performing the success criteria. For instance, the letter "D" predicted quite well across all 50 trials, and "Sure" did okay with a 70% success rate. The only gesture that performed close to what the matrix showed was "Will", which had a poor 40% success rate. The most likely explanation is noise captured during live predictions, together with test data that happened not to favor these labels. Test data does not give a 100% accurate depiction of how the model will behave during real-time predictions, and the case just described is an example of that. A picture of the success criteria results is shown below.
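To make the link between diagonal counts and success rates concrete, here is a minimal sketch of how a per-class rate can be read off a confusion matrix. It assumes rows are true labels and columns are predicted labels; the 3-class matrix and its counts are hypothetical and are not the project's results.

```python
def per_class_recall(matrix, labels):
    """Return {label: correct / total} for each row (true class) of a confusion matrix."""
    recall = {}
    for i, label in enumerate(labels):
        total = sum(matrix[i])                      # all test samples of this true class
        recall[label] = matrix[i][i] / total if total else 0.0
    return recall

# Hypothetical example: 120 test samples per class (1.2e2 on a perfect diagonal).
labels = ["A", "D", "Will"]
matrix = [
    [120, 0, 0],    # every "A" sample predicted as "A"
    [30, 90, 0],    # 30 "D" samples mistaken for "A"
    [60, 12, 48],   # only 48 of 120 "Will" samples predicted correctly
]
recall = per_class_recall(matrix, labels)
```

A diagonal entry well below 120 in this layout means the class was often mistaken for something else on the test set, which may or may not match live behavior.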

Letter Model

The letters and the RELAXED class from the combined model were split out into their own model. The training and testing datasets were taken directly from the combined datasets to provide consistency and to examine how the letters would behave on their own. The confusion matrix for the LETTERS model is shown below.

Unlike the GESTURE model, this matrix reflected most of the results seen in the success criteria. The only difference is that the letters "S" and "T" could not be predicted: their signs are very similar to each other and to the letter "A", which was the value predicted instead most of the time. "U" and "V" could not be predicted either, because of their similarity to "R". Out of the entire alphabet, these 4 letters were the only ones that could not be predicted, owing to the nature of their signs and the model. A graphic of the success criteria results is shown below.

Phrase Model

The PHRASE model was extracted in the same manner as the LETTER model, with all 20 gestures and the RELAXED class. This model displayed the most mixed results of the 3 models. The reasons are the same as those given in the GESTURE explanation: noise, and the test data used to evaluate the model. "Hello" was the only phrase whose entry in the matrix matched how it behaved during real-time predictions. Phrases like "Wait", "Will", "Drive", and "Hungry" all met with mixed results, with success rates ranging from 44% to 60%. Admittedly, "Wait" appeared in the matrix with a count of 1.1e2 (110), which gave a bit of insight into how it would perform, but not enough. The success criteria results for the PHRASE model are shown below the confusion matrix.

Videos

Letters

Phrases

November 20, 2020 (Week 13)

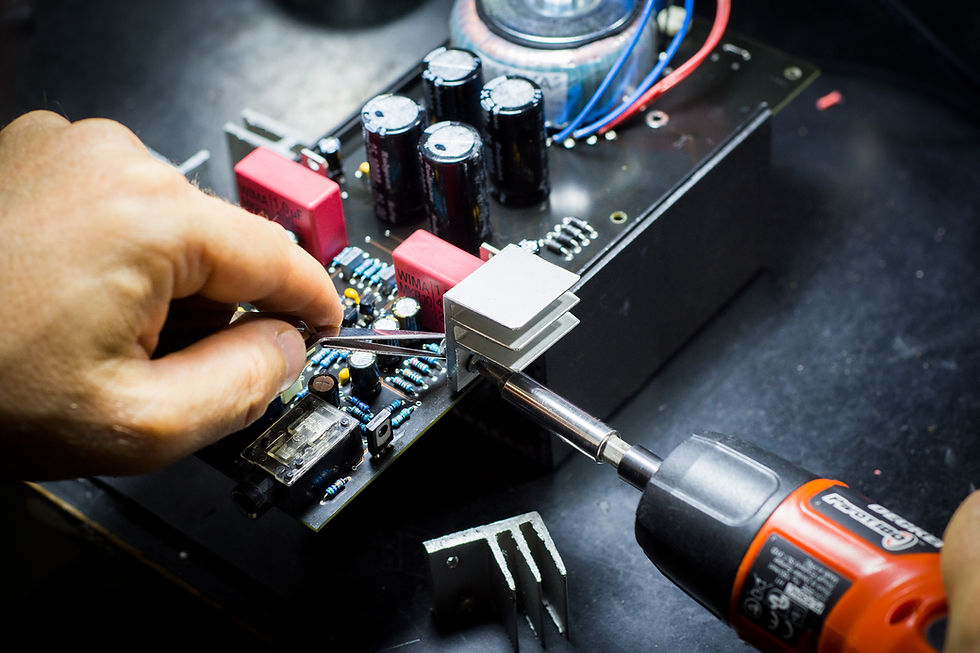

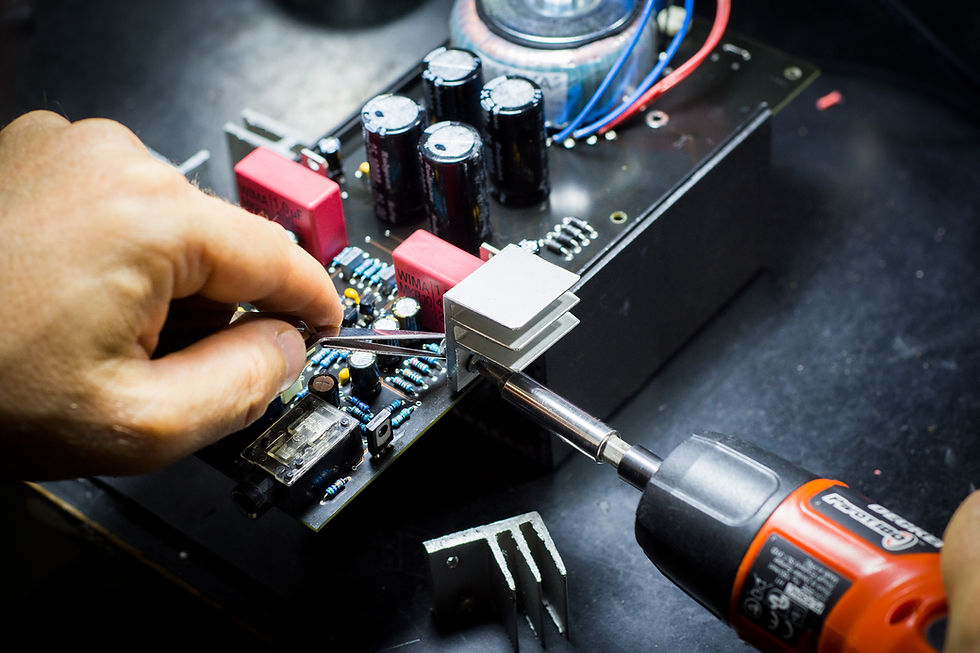

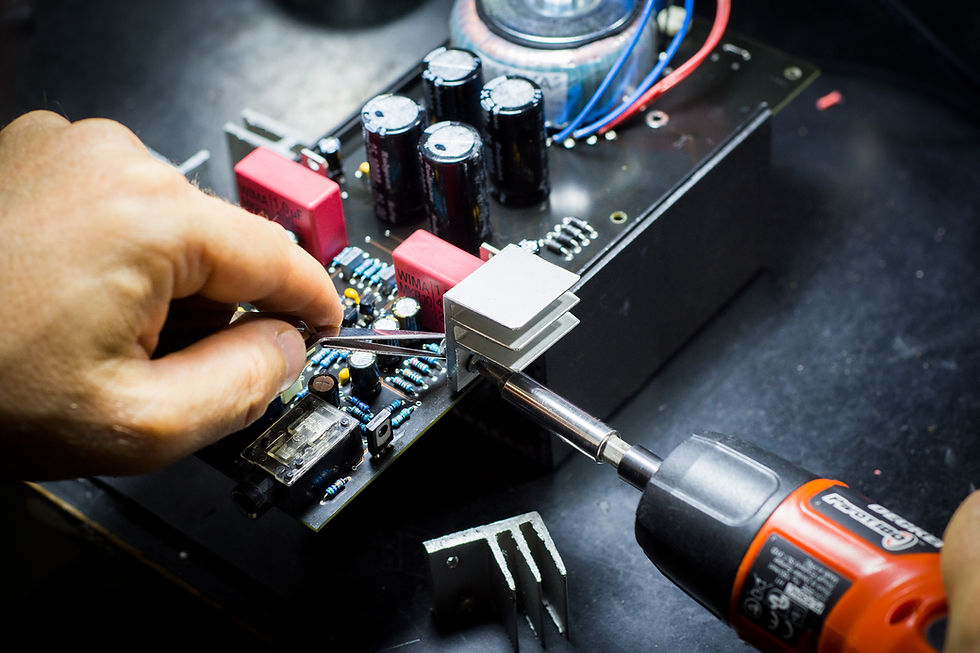

This week, an unfortunate event occurred. During gesture testing, the microcontroller suffered a power surge that fried the board. Luckily, the flex sensors and other related devices were fine. The cause appeared to be contact between 2 pins. This put the team a little behind because a replacement had to be ordered. Fortunately, the device arrived on Wednesday and was assembled the same night. Testing and configuration were done on Thursday evening. So far the device appears to be delivering output from both the OLED and the EMIC2. The remainder of the week went toward creating new datasets with the new voltage values, followed by training and testing. Three datasets were made: MOBILE, LETTERS, and PHRASES. MOBILE is just LETTERS and PHRASES combined. The idea was to examine how much more accurately the glove would perform if the datasets were split instead of combined. However, the primary focus was improving the MOBILE dataset. The video below shows the glove in action.

The glove in the video ran the model below. The model used standardization for pre-processing and trained for 250 epochs (cycles). Its confusion matrix is below, followed by the confusion matrices for LETTERS and PHRASES. For the success criteria, we plan to use these other models to compare their accuracy rates with the combined one. The report was also updated this week, specifically chapter 3, where the machine learning training and testing process is discussed in depth. Because very little time is left, this weekend is planned for success criteria tests and report updating. Tweaking and configuring of the glove is also still being done. There is one matrix each for LETTERS, PHRASES, and MOBILE, in that order.

The Emic 2 Text-to-Speech Module is the multi-language voice synthesizer used to convert the stream of digital text passed by the MCU into natural sounding speech [20]. The output sound comes from an 8Ω speaker connected to the on-board audio power amplifier through the 3.5 mm audio jack. The module receives 3.3V power from the MB102 Power Supply Module and communicates to the MCU through serial interface at Digital Pins TX1 and RX0.

Wiring the Emic2 module to both the microcontroller and the audio amplifier was very simple. Both boards share power from the power supply, the Emic2's serial lines connect to the Nano 33 BLE's digital pins, and its speaker output connects to the amplifier. A detailed connection diagram for both components is shown below in Figure 3.2.

Emic2 Troubleshooting

Because of the nRF52840 in the Nano 33 BLE, the SoftwareSerial.h library recommended by the manufacturer could not be used; the controller does not require it. Instead, a modified library (EMIC2_UART.h) provided by shamyl was used to communicate with the Emic2 over hardware serial through the Universal Asynchronous Receiver/Transmitter (UART) peripheral [36]. Initially the Emic2 output its audio at a low volume. The input voltage was checked, as were the voltage divider values at the analog pins. It became clear that an amplifier was required to boost the output volume. A readily available PAM8402 was used to bring the audio output up to a reasonable level.

Lastly, the glove was rebuilt using the new microcontroller. All hardware components were tested and verified, then passed over to Etin-osa to complete the training implementation.

November 13, 2020 (Week 12)

This week, much work was done on implementing the model on the Arduino, as well as addressing some of the problems that plagued last week.

For starters, a script was written to automatically collect gesture data for the different classes at set intervals. A gesture would be collected for each class every 10 seconds; the loop would go through every class in the array until completion. This covers all 47 classes (A-Z, RELAXED, 20 words).
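The interval-based collection loop can be sketched roughly as follows. This is only an illustration in Python, not the script that ran on the glove: the function names are hypothetical and the 20 word labels are placeholders (`WORD_1` to `WORD_20`), since the full word list is not given here.

```python
import string

def build_class_list(words):
    """A-Z, then RELAXED, then the word classes."""
    return list(string.ascii_uppercase) + ["RELAXED"] + list(words)

def collection_schedule(classes, interval_s=10):
    """Return (start_time_in_seconds, class_name) pairs, one capture per interval."""
    return [(i * interval_s, name) for i, name in enumerate(classes)]

# Placeholder word labels; the real 20 phrases are not listed in this log.
words = [f"WORD_{n}" for n in range(1, 21)]
classes = build_class_list(words)
schedule = collection_schedule(classes)
```

With 47 classes and a 10 second interval, one full pass over the array takes a little under 8 minutes.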

One major problem that was solved was making the flex sensors and IMU work together; in other words, putting their readings into one vector and sending it into the input tensor at the same time. With that issue resolved, the next task was combining all 47 classes into one model. However, this proved to be an issue for a while. Whenever the model was integrated and uploaded to the microcontroller, the Arduino would freeze (this was indicated on the OLED, which is touched upon later). The Arduino IDE itself would lag once the Serial Monitor was opened. Many methods were tried to fix this. One was conserving memory, which included wrapping serial print strings in the F() macro to put less load on the SRAM. Certain variables were also changed into #defines, since these take no bytes, compared to ints and floats, which take 1-4 bytes. Visual Studio was even installed to test whether the problem came from the Arduino IDE software.

There was also the possibility of changing the model. Specifically, this meant either:

A. Reducing the number of words being performed thus finding the cap where it doesn't freeze

B. Splitting the model into 2 demonstrations, which would be letters and words.

To prepare for these possibilities, four models were worked on (FULL, CAP, LETTERS, WORDS):

FULL - includes all the gestures and letters

CAP - number of classes that can be included in the model right before freezing

LETTERS - all the letters including the "RELAXED" class

WORDS - all the words including a "RELAXED" class

However, when changing and reworking the model to find the CAP, the microcontroller managed to take ALL the classes which was odd. The only possible reason for this could be due to the number of hidden layers between the FULL model that worked and the FULL model that didn't.

The model above is the one that didn't work and the one below is the one that did. Since the model above had 5 more hidden units than the one below, it appears that those 5 extra units caused or contributed to the slow-down. Hopefully, accuracy can be improved without having to increase those units. The OLED was used throughout to test the functionality of the glove; when the serial monitor wasn't working, the OLED was relied on to see whether an output was being shown.

Regarding the OLED, there was an issue with showing output when using the microcontroller mounted on the glove. In fact, nothing was displayed on the screen at all. Voltage checks, continuity checks, and an I2C scanner were used to determine whether the issue was with the wiring or the I2C channels. None of these turned up a problem. Moreover, BOTH of the OLEDs worked on the OTHER microcontroller on the breadboard, while NEITHER worked on the one on the glove. The only probable cause is the microcontroller itself.

The OLED from the glove itself is seen working with the microcontroller on the breadboard. Because of this, the code was temporarily edited to show the output on the serial monitor, at the cost of visualizing the class percentages.

The rest of the week was spent working on accuracy and configuring the model to interpret the correct classes while keeping the model itself under the hardware's apparent limitations. The confusion matrix was rewritten and made bigger to accommodate the increase in classes. The matrix below is for the 47 classes with both IMU and flex inputs. Preprocessing was not used, as the results achieved were fine. However, tweaks and edits will still be made to push accuracy as high as possible.

The code is complete; what remains is troubleshooting and improving accuracy. One issue that plagued this week was that the library used for the EMIC was causing problems and was no longer compatible. Hours were spent trying to find a suitable one; some of the libraries found either did not suit the microcontroller's architecture or demanded a specific file from the directory even though it was already there. Based on the forums, some say these are Arduino IDE bugs. Eventually one was found: the EMIC2_UART library on GitHub [1]. The link to it is in the description below.

The goal next week is to replace the necessary parts on the glove and mount every piece of hardware on it. Success criteria trials will also start next week, alongside further accuracy improvements and troubleshooting.

The link to the GitHub site:


August 28, 2020 (Week 1)

This week Etin-osa ordered the OLED display and gloves needed for the project. More time was spent working on the script reading LSM9DS1 IMU data. The Time & Effort, Engineering Specifications and Weekly Meetings portions of the website were all updated for Senior Design.

November 6, 2020 (Week 11)

This week was spent evaluating, testing, and troubleshooting fingerprinting and gestures for the sign-language glove. A dataset was made combining both the flex voltages and IMU data. This was completed in Excel. The graphic below shows the features for each class; the only one visible in the picture is the letter A. The letters below it go all the way to Z. There is also a "RELAXED" class at the end that is used to tell the model that the glove is in a calm state.

The columns ranging from F1 to F5 represent the flex voltage values for each class. The next 3 columns, aX, aY, and aZ, represent the accelerometer data from the IMU. The last 3, gX, gY, and gZ, represent the gyroscope data from the IMU. The reason for so many columns (features) was to widen the differences between the classes so that the model could tell them all apart. So far, this has been done for the fingerprinting. A little more preprocessing and tweaking should increase the accuracy.
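As a rough illustration of the column layout just described, one dataset row can be assembled like this. The function name and the sample readings are hypothetical; only the 11 column names come from the dataset description.

```python
FEATURE_NAMES = ["F1", "F2", "F3", "F4", "F5",   # flex sensor voltages
                 "aX", "aY", "aZ",                # accelerometer axes
                 "gX", "gY", "gZ"]                # gyroscope axes

def build_feature_row(flex_volts, accel, gyro):
    """Concatenate one sample's readings in the dataset's column order."""
    if len(flex_volts) != 5 or len(accel) != 3 or len(gyro) != 3:
        raise ValueError("expected 5 flex, 3 accelerometer and 3 gyroscope values")
    return list(flex_volts) + list(accel) + list(gyro)

# Hypothetical readings for a single sample.
row = build_feature_row([1.9, 2.1, 2.0, 1.7, 1.8],
                        [0.02, -0.98, 0.10],
                        [1.5, -0.3, 0.0])
named = dict(zip(FEATURE_NAMES, row))
```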

However, when going to implement this into the Arduino, there was no output being shown at all. It's suspected that when inputting data, the Arduino has a hard time taking different types of data (ADC and IMU at the same time). More editing is being done to resolve this issue. Some of the code written is below.

The figures above show the code for taking input data from the flex sensors and the IMU. The one below it shows the input data being preprocessed before being passed into the input tensor. This is because the data was preprocessed when the model was trained, so real-time input data has to be processed the same way.

Due to this problem, troubleshooting was then done separately on fingerprinting and IMU data. Fingerprinting had good results; IMU data was recorded and tested, but more tweaking and troubleshooting has to be done to increase accuracy.

This is for the IMU. The accuracy obviously still needs work before it can be moved to the glove.
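The point about matching preprocessing at training time and at inference time can be sketched as below. This assumes standardization (zero mean, unit variance per column) as the preprocessing step, and the function names are hypothetical; the key idea is that the statistics are computed once from the training data and then reused unchanged on each live sample.

```python
import statistics

def fit_standardizer(rows):
    """Compute per-column mean and standard deviation from the training rows."""
    columns = list(zip(*rows))
    means = [statistics.fmean(c) for c in columns]
    stds = [statistics.pstdev(c) or 1.0 for c in columns]  # avoid dividing by zero
    return means, stds

def standardize(row, means, stds):
    """Apply the stored training statistics to a single sample."""
    return [(x - m) / s for x, m, s in zip(row, means, stds)]

# Tiny made-up training set with two features on very different scales.
train = [[1.0, 10.0], [3.0, 30.0]]
means, stds = fit_standardizer(train)
live_sample = standardize([3.0, 10.0], means, stds)
```

On the microcontroller, the same means and standard deviations would have to be stored as constants and applied before the values are written into the input tensor.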

October 30, 2020 (Week 10)

The main focus of this week was getting the hardware onto the physical glove. The team met twice, on Tuesday and Thursday.

Joseph constructed the casings and wiring for the glove, which house the microcontroller and the resistors. Power comes from the power module, which contains the 9V battery. However, only 3.3V is drawn from the microcontroller to run the system. A permaboard cutout holds the 5 resistors (4 that are 15k and 1 that is 50k). The wires were arranged to create a voltage divider with the flex sensor as the variable resistor. Pins A3-A7 were used to capture the voltage values. The casing was attached to the back of the hand of the glove using Velcro.

During the troubleshooting phase, the wires seemed to show different values and were reading incorrectly. The wiring that Joseph set up was checked for both voltage values and continuity, and everything seemed to be fine. It was then thought that the problem originated from the ADC conversion or the serial monitor. The other microcontroller was tested on the BREADBOARD and its values seemed correct with respect to the flex readings Excel sheet. It was unclear whether the problem came from the microcontroller currently on the GLOVE or from the wiring. We eventually realized that the incorrect pins had been used.

October 23, 2020 (Week 9)

Etin-osa and Joseph continue to meet weekly; this time the meeting was cut short after being rained out at Valencia West Campus. The short 2.2" flex sensor was received and installed on the prototype glove assembly that was created last week. After meeting on Tuesday at Valencia, Joseph focused on improving the 3D-printed enclosures for the components, whereas Etin-osa focused on creating a wider voltage range for the flex sensors and implementing the OLED to display letters and gestures predicted with high confidence.

To further improve 3D printing quality and accuracy, time was spent researching bed leveling techniques, extrusion temperatures, and feed-rate settings. Joseph was able to reduce the maximum Z error from 0.299mm to 0.091mm, and the printer's RMS Z error from 0.134mm to 0.037mm. This created a much flatter printing surface, helping first-layer bed adhesion and reducing ghosting on layer lines.

The enclosures for the MB102 power supply module and EMIC2 were further improved on, scaled and printed again for glove placement. Mounting loops were also added for easy placements. All components now have a housing/mount to place on the glove. The models will continue to be worked and improved on, focusing on maintaining a small footprint, comfort and thinness.

On the software side, this week was spent tweaking and changing training and testing dataset values to enable the model to generalize better. The fixed resistors were also replaced with 15k ones to widen the voltage range. A new model was created and achieved better results after more decimal places were added for better accuracy. However, on the Arduino side, gesture making was a bit finicky; this was due to noise and the flex sensors jostling on the glove (the glove would have been assembled if not for the rain).

The code for the OLED and the LED was written to choose the letter with the highest predicted value. It's likely that a threshold will be added so that only gestures with "high confidence" are passed to the OLED. As explained in previous weeks, a gesture is made and sent to the OLED, where letters "stack" to make a word. A timer starts with the first letter and expires if no other gestures are made within a certain amount of time. Once that happens, the LED turns yellow and the word is passed to the EMIC2.
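The stacking behavior described above can be sketched as a small state machine. This is an illustration in Python rather than the Arduino code: the class name, the 0.8 threshold, and the 3 second timeout are all assumptions, and returning the finished word stands in for turning the LED yellow and handing the word to the EMIC2. It also assumes the timer restarts on each accepted letter.

```python
class WordStacker:
    """Stack confident letter predictions into a word; flush after a quiet period."""

    def __init__(self, threshold=0.8, timeout_s=3.0):
        self.threshold = threshold    # minimum probability to accept a letter (assumed)
        self.timeout_s = timeout_s    # quiet time before the word is finished (assumed)
        self.letters = []
        self.last_time = None
        self.prev_label = None

    def update(self, probabilities, labels, now):
        """Feed one prediction; return the finished word on timeout, else None."""
        if self.letters and now - self.last_time >= self.timeout_s:
            word = "".join(self.letters)
            self.letters, self.last_time, self.prev_label = [], None, None
            return word               # the current prediction is skipped on a flush
        best = max(range(len(labels)), key=lambda i: probabilities[i])
        label = labels[best] if probabilities[best] >= self.threshold else None
        # Only stack a letter when it changes, so a held gesture is not repeated.
        if label not in (None, "RELAXED") and label != self.prev_label:
            self.letters.append(label)
            self.last_time = now
        self.prev_label = label
        return None

labels = ["H", "I", "RELAXED"]
stacker = WordStacker()
results = [
    stacker.update([0.90, 0.05, 0.05], labels, now=0.0),  # "H" accepted
    stacker.update([0.10, 0.85, 0.05], labels, now=1.0),  # "I" accepted
    stacker.update([0.40, 0.40, 0.20], labels, now=2.0),  # below threshold, ignored
    stacker.update([0.00, 0.00, 1.00], labels, now=4.5),  # timeout, word is flushed
]
```

One consequence of the change-only rule is that a doubled letter needs a brief return to RELAXED between the two signs.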

October 16, 2020 (Week 8)

Etin-osa and Joseph met up this week to complete the prototype glove assembly. This included, but was not limited to, installing the flex sensors on the glove and creating wiring that allows a large range of movement for creating labeled training data. The flex sensors were mounted on the glove using rubber hoses, which allow the sensors to be slipped in. The EMIC2 and a couple of other items were exchanged. The mounts for the EMIC2 and power module still had to be designed, so instead it was decided that wires would be attached to the flex sensors so that they could be connected to the breadboard for testing.

Values were captured for training the machine learning model with normalization. All letters A-Z were captured and trained. The code and the resulting matrix are shown below.

The results were fair. The reason was the lack of the IMU, which would help differentiate between letters with roughly the same flex resistances but different orientations. It was also decided that the fixed resistors would be changed from 10k to the value that gives the most flex sensitivity, to widen the voltage range (this was worked out in Excel). All in all, predictions were a bit finicky because of what was described above and because the glove was a prototype. Getting the right letter also took a bit of time due to the strict training datasets. Next time, more varied data will be used.

October 8, 2020 (Week 7)

This week was spent learning how to implement machine learning with TensorFlow Lite and Keras. After viewing and studying the IMU and flex classifiers on Google Colab, Joseph was able to understand and comment the scripts to increase his understanding.

The first step was understanding that the machine learning approach has no conditional statements to classify its output. Instead, the algorithm learns the features that characterize each class so that it can classify new inputs and accurately predict their respective outputs.

Keras is an open-source API built on top of TensorFlow for interfacing with artificial neural networks (ANNs) [2]. Artificial neural networks work in layers, with an input layer (our sensors), hidden layers that carry weights for the nodes, and an output layer, where the sign will be predicted. In Keras this is done by creating an instance of a Sequential object. An array of 3 Dense objects is passed to the constructor; these are our input, hidden, and output layers. A dense layer is the most common type of layer in a neural network, connecting each input to each output within the layer. The units are the number of nodes (or neurons) in each layer. The input shape is the shape of the data that will be fed into the model.

The activation function defines the output of a node (neuron) given a set of inputs, translating the weighted sum to a value between a lower limit and an upper limit (usually 0 and 1). The activation function is typically a non-linear function that follows a dense layer. The relu (rectified linear unit) function used for the input and hidden layers returns max(0, x), the element-wise maximum of 0 and the input tensor [3]. If the input is less than or equal to 0, the function transforms it to 0; otherwise the output is the given input. The more positive the neuron, the more activated it is [4]. The default parameters can be modified to allow non-zero threshold values; this may be used later during troubleshooting. The softmax function used for the output layer converts a real vector to a vector of categorical probabilities, with an output range between 0 and 1. Softmax is often used as the activation of the last layer of a classification network because the results can be interpreted as a probability distribution, i.e. determining which sign was gestured with the glove [3].

After configuring the architecture, the next step is to train the network. To do this we must compile the model. This is where we optimize the weights within the model to minimize the value of the loss function, which measures what the network is predicting against the true label [4]. Every optimization problem has its own objective; for our project the Adam algorithm is used. The first parameter specified is our optimizer. Adam optimization is a stochastic gradient descent method that is based on adaptive estimation of first-order and second-order moments [5]. It was chosen because it is computationally efficient, has little memory requirement, and is well suited to problems with many data parameters, such as our total IMU (gyroscope x,y,z & accelerometer x,y,z) and flex sensor data.

The gradient of the loss function is an error gradient computed with respect to a single weight, which means its value is different for each weight [4]. The gradient descent algorithm changes the weights so that the next evaluation reduces the error. During each epoch this value is passed to the optimizer (Adam) and multiplied by the learning rate. The learning rate (0.001 - 0.01) is the step size that controls how much the model changes in response to the estimated error each time the weights are updated. A learning rate set on the high side risks overshooting: taking too large a step and shooting past the minimum of the loss function [4]. A very small learning rate will require much more time to reach the minimized loss. The next parameter specified is the loss function used. Then the metric that will be printed out during training is specified. Since we are looking for accuracy in the gestures recognized, accuracy was chosen.

Lastly, the function that actually trains the model (model.fit) is called. The first parameter accepted is the training data, usually a numpy array that holds all the data. The second parameter is the labels for the first parameter. Validation split = 0.20 reserves 20% of the training data set for validation [6]. Batch size is how many pieces of data are sent to the model at once. Epochs is how many passes of the data occur through the model. Shuffle = true shuffles the data into a different order for each epoch. Lastly, verbose = 2 means that we want to see Level 2 output as the model is trained.
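As a plain-Python illustration of the two activation functions discussed above (not the Keras implementation itself), ReLU and softmax can be written in a few lines. The input values are made up.

```python
import math

def relu(values):
    """Element-wise max(0, x): negatives become 0, positives pass through."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)                           # subtracting the max avoids overflow
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

hidden = relu([-2.0, 0.0, 3.5])
probabilities = softmax([1.0, 2.0, 3.0])
```

The largest raw score always receives the largest probability, which is why the predicted sign can be taken as the class with the highest softmax output.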
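The effect of the learning rate can be seen on a toy problem. The sketch below uses plain gradient descent (not Adam) on the one-weight loss L(w) = w², whose gradient is 2w. The learning rates are deliberately exaggerated compared with the 0.001 - 0.01 range mentioned above so that the behavior shows up within 20 steps.

```python
def gradient_descent(start, learning_rate, steps):
    """Minimize L(w) = w**2 and return the weight after every step."""
    w = start
    history = [w]
    for _ in range(steps):
        grad = 2.0 * w                 # dL/dw for L(w) = w**2
        w = w - learning_rate * grad   # step against the gradient
        history.append(w)
    return history

steady = gradient_descent(1.0, learning_rate=0.1, steps=20)     # shrinks toward 0
slow = gradient_descent(1.0, learning_rate=0.001, steps=20)     # barely moves
overshoot = gradient_descent(1.0, learning_rate=1.1, steps=20)  # jumps past 0 and grows
```

With the largest rate each step lands on the opposite side of the minimum and further away than before, which is the overshooting described above.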
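To show how validation split and batch size interact, the arithmetic can be sketched without Keras. The helper name and the sample counts are hypothetical, and the rounding used by Keras itself may differ slightly from this simplified version.

```python
import math

def split_and_count_batches(num_samples, validation_split, batch_size):
    """Return (training samples, validation samples, batches per epoch)."""
    num_val = int(num_samples * validation_split)    # held out for validation
    num_train = num_samples - num_val
    batches_per_epoch = math.ceil(num_train / batch_size)
    return num_train, num_val, batches_per_epoch

# Hypothetical dataset of 1000 samples, validation split of 0.20, batch size of 32.
num_train, num_val, batches = split_and_count_batches(1000, 0.20, 32)
```

In this example each epoch would run 25 weight updates over 800 samples, with the remaining 200 used only to report validation metrics.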

[1] https://blog.arduino.cc/2019/10/15/get-started-with-machine-learning-on-arduino/

[2] https://keras.io/about/

[3] https://keras.io/api/layers/activations/

[4] https://www.youtube.com/playlist?list=PLZbbT5o_s2xq7LwI2y8_QtvuXZedL6tQU

[5] https://keras.io/api/optimizers/adam/

[6] https://keras.io/api/preprocessing/

Etin-osa spent this week trying to get the IMU data interpreted on the microcontroller using normalization. That part was achieved and output was shown, as pictured below:

However, as with last week, accuracy was not as high as hoped. The model's training results look great, but whenever it is evaluated on testing data the quality goes down, as shown by the confusion matrix. The problem is believed to stem from the way testing data is acquired and from the ambiguous nature of the training data. One potential idea is to combine flex and IMU data into one dataset where each column is normalized on its own scale to accommodate the massive differences in range. In addition or instead, the accelerometer and gyroscope data can be averaged to remove a bit of complexity from the set.
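The idea of normalizing each column on its own scale can be sketched with min-max scaling, as below. The function names and sample values are hypothetical; the two columns stand in for a flex voltage and a gyroscope axis with very different ranges.

```python
def fit_min_max(rows):
    """Find the minimum and maximum of every column in the training rows."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]

def normalize(row, mins, maxs):
    """Scale each value into 0..1 using its own column's range."""
    return [(x - lo) / (hi - lo) if hi > lo else 0.0
            for x, lo, hi in zip(row, mins, maxs)]

# Made-up rows: [flex voltage, gyroscope reading].
train = [[1.0, -200.0], [2.0, 0.0], [3.0, 200.0]]
mins, maxs = fit_min_max(train)
scaled = normalize([2.0, 100.0], mins, maxs)
```

After scaling, both features occupy the same 0 to 1 range, so the large gyroscope values no longer dominate the small voltage values.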


October 2, 2020 (Week 6)

This week was spent trying to get the machine learning model to interpret IMU readings from the Arduino Nano 33 BLE Sense. Some issues arose from the microcontroller itself, which took some time away from the IMU work. The IMU gestures for training were captured using the script below, which records a set of gestures once the accelerometer reading passes a certain threshold. The data was then used to train a model. The model interprets gestures with fair to low accuracy without any pre-processing. Standardization is currently being worked on for better accuracy. It was achieved in the Python model; however, formatting the input data in C++ on the Arduino proved to be a challenge. Further work is being done; next week will look more into normalization and how it can help improve results on the IMU. Below is the code for the IMU and the results:

The code below shows the input data being sent to the model, along with its output. The goal next week, based on the proposed timeline, is to combine the flex and IMU data if all goes smoothly with normalization. One way of doing this is to have a dataset with 11 features (5 for the flex sensors and 6 for the gyroscope and accelerometer). However, I think I want to focus on getting the flex sensors fully functional in terms of inferencing all the gestures before moving on to the IMU.

Work on 3D printed design enclosure continued this week. A case to hold the SSD1306 OLED display was redesigned and printed. The print was originally designed just short of the actual size. Luckily the 3D model could be scaled within the Repetier interface where object slicing occurs. Time spent designing prototype enclosures will continue throughout the design process as improvements in comfort and hardware implementation arise.

An enclosure design for the MB102 breadboard power supply has begun. The power supply, battery, and EMIC2 with speaker will most likely be placed on the wrist, behind the flex sensors, Arduino Nano 33 BLE, and SSD1306 OLED display.

The Design Report was also worked on this week. Lots of time went into organizing the information from Chapters 1 and 4. Chapters 2 and 3 were inserted into the Design Report with information on the research and contributions done up to this point. Contributions on the chosen processor module, the Inertial Measurement Unit implementation, and flex sensor and OLED initialization were included. As more data is received and evaluated, the information in those chapters will grow. The Table of Contents and Table of Figures were updated. Most of the references used have been inserted; those that haven't are marked in red with their reference links.

Standardization

Normalization

September 25, 2020 (Week 5)

With the microcontroller, all 5 flex sensors, and the text-to-speech conversion module on hand, connecting all components together and confirming functionality is the next step. Joseph confirmed all 5 flex sensor resistances with the multimeter and in the serial monitor through the 10-bit value the voltage divider produces. 3 of the flex sensors were handed off to Etin-osa to make sure the machine learning algorithm recognizes and evaluates the flex sensors correctly. We attempted to meet up at the Hiawassee Library but were denied entry; this team desperately needs an area where we can collaborate on the project.

As Etin-osa worked on ML, Joseph began designing the prototype enclosure for the required components. The 3D printed enclosure will be designed in multiple pieces to create mounting locations for all components. Autodesk Solidworks and Repetier (with CURA engine) were used to design and print the enclosure. The first design attempt is shown below.

After printing the first base, it was noticed that the holder for the Arduino Nano 33 BLE Sense was not wide enough. Slider holders were created to hold the Nano 33 BLE. A cap is currently being worked on to house the OLED display as well as the EMIC2. The locations for power distribution and the speakers have yet to be finalized. Pictured below is Version 2 of the 3D printed enclosure.

On the software side, 3 flex sensors were given to Etin-osa so that testing could be done on HIS Arduino Nano using a 3.3V input; the flex readings were read by the analog function which was used to find voltage, and the bend angle. The only feature that did not display accurate readings was the bend angle. Aside from that, everything else was fine.

Much of the time this week was spent trying to integrate the ML model into the Arduino. Note that the actual glove will have 5 flex sensors. However, for prototyping, 3 were used for the sole purpose of getting the model to interpret them. An Excel chart was made to show the different voltage ranges achievable using different fixed resistors. The ranges for the individual flex sensors were only slightly different. For simplicity, it was decided that 10k-ohm would be used as the fixed resistor.

In an event where flex sensors need to be more sensitive and/or a wider volt range is needed, we can settle for a larger fixed resistor.
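The kind of comparison made in the Excel chart can be sketched as follows. Everything numeric here is an assumption for illustration: the flex sensor is taken to swing between 10k (flat) and 25k (bent), the supply is 3.3V, and the analog pin is assumed to read the voltage across the fixed resistor. The measured sensor values would replace these placeholders.

```python
def divider_output(v_in, r_fixed, r_flex):
    """Voltage across the fixed resistor when it is in series with the flex sensor."""
    return v_in * r_fixed / (r_fixed + r_flex)

def voltage_swing(v_in, r_fixed, r_flat, r_bent):
    """Difference in divider output between the flat and fully bent sensor."""
    return abs(divider_output(v_in, r_fixed, r_flat)
               - divider_output(v_in, r_fixed, r_bent))

# Hypothetical flex sensor range: 10k flat, 25k bent, on a 3.3V supply.
swings = {r: voltage_swing(3.3, r, 10_000, 25_000)
          for r in (10_000, 15_000, 50_000)}
```

Under these assumed values, moving from 10k to 15k widens the swing, but a much larger resistor narrows it again; for this topology the swing peaks when the fixed resistor is near the geometric mean of the flat and bent resistances.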

Regarding flex capturing, flex sensor data was captured using a script Etin-osa wrote. This included training and testing data.

The script is shown below:

As for the machine learning model, a mock-up dataset was created to test its functionality. A total of five classes were created: "A", "B", "C", "D", and "RELAXED" were the 5 possible outputs that the model could predict. In the serial monitor, each class is shown followed by its predicted probability as a percentage. As you bend the flex sensors, the percentage grows highest for the class whose training data most closely matches the current position of the flex sensors. The others either drop or stay roughly level because of the similarities some of the classes have with each other. The "RELAXED" state was introduced to let the system know that a sign isn't being made.
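The way the per-class percentages relate to the final prediction can be sketched in a few lines. This is only an illustration, not the Arduino code: it assumes the model ends in a softmax layer (common for classifiers, but not stated in this log), and the raw scores and function names are hypothetical.

```python
import math

CLASSES = ["A", "B", "C", "D", "RELAXED"]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def format_probabilities(probabilities):
    """One 'CLASS: xx.x%' line per class, similar to a serial-monitor readout."""
    return [f"{name}: {p * 100:.1f}%" for name, p in zip(CLASSES, probabilities)]


def predict(probabilities):
    """Return the class with the highest probability."""
    best = max(range(len(CLASSES)), key=lambda i: probabilities[i])
    return CLASSES[best]


if __name__ == "__main__":
    raw_scores = [0.2, 2.9, 0.4, 1.1, -0.5]   # hypothetical model outputs
    probs = softmax(raw_scores)
    print("\n".join(format_probabilities(probs)))
    print("Prediction:", predict(probs))
```

Because the probabilities always sum to 1, a rise in one class necessarily pulls the others down, which matches the behavior described above.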

Here is the code below:

Much credit has to be given to Pete Warden, Shawn Hymel, Don Coleman, Sandeep Mistry, and Dominic Pajak for providing example code and videos explaining how some of the code works [3]. This includes the libraries to use, how to configure the model, interpreters, memory arenas, and input and output buffers, and how to perform debugging checks [2]. The work done on Etin-osa's part included creating the datasets for the model, the model itself, and the code for sending data into the model as an array [1,3]. Note that next week will involve the IMU side of the input data, which ASL signs are highly dependent on [1]. However, much of the model integration work is figured out and done.

Below are the class probabilities as shown through the serial monitor:

A

B

D

C

RELAXED

The sites used for the model integration are listed with the works cited on the references page of this site.

September 17, 2020 (Week 4)

All parts have now been received; the flex sensors were delivered this week and have been confirmed for functionality. Joseph added the code required to read flex sensor output data to the previous IMU serial monitor script. The flex sensor has a resistance that varies depending on how much the finger is bent. Because of this, the flex sensor is combined with a static resistor to create a voltage divider. This produces a variable voltage that can be read by the Arduino Nano's analog-to-digital converter.

We determined a good value for the fixed resistor R1 by analyzing the expected values from the flex sensor. The flex sensor had a flat resistance of 8.26 kΩ and a maximum expected resistance (on a clenched fist) of about 19.89 kΩ. A 10 kΩ resistor was used on the ground side of the voltage divider and the flex sensor on the 5V side. As the flex sensor bends and its resistance increases, the voltage on A2 decreases.

The script was first updated by initializing all input constants such as the pin connected to the voltage divider, 5V voltage source, unbent and bent flex sensor resistances and lastly the value of the voltage divider resistance.

In void setup, Joseph added the address for the OLED display and a serial message that posts when the display cannot be reached. The display is then cleared and initialized with the text size, the color, and lastly the coordinates where the text will begin. The accelerometer and gyroscope data are then printed to the OLED display. Joseph initially ran into issues with the OLED not working; it turned out that the pins needed to be soldered to the Arduino, as the breadboard wires were not making a connection to the Nano 33 BLE.

In the loop function, the sensor is first read and converted to its digital value: the analog voltage is converted into a 10-bit number ranging from 0 to 1023. Next, the resistance of the flex sensor is derived from the voltage divider formula and displayed on the serial monitor. The flex sensor's resistance and bend angle should both be displayed. Currently there are spikes in resistance during serial viewing. This is most likely because none of the components are soldered together; all components are currently pinned into a breadboard for function confirmation.
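The conversion chain described above (ADC count to voltage, voltage to flex resistance, resistance to bend angle) can be checked on a computer with a small sketch. It is not the Arduino script itself: it uses the values stated in this entry (5V supply, 10 kΩ fixed resistor on the ground side, 8.26 kΩ flat, 19.89 kΩ bent) and assumes a simple linear mapping from resistance to a 0 to 90 degree angle. The 90 degree limit and the function names are assumptions.

```python
V_SUPPLY = 5.0        # volts, divider supply stated in this entry
R_FIXED = 10_000.0    # ohms, fixed resistor on the ground side
R_FLAT = 8_260.0      # ohms, flex sensor unbent
R_BENT = 19_890.0     # ohms, flex sensor on a clenched fist
ADC_MAX = 1023        # largest 10-bit ADC count


def adc_to_voltage(count):
    """Convert a 10-bit ADC count back to the voltage at the divider node."""
    return count * V_SUPPLY / ADC_MAX


def flex_resistance(v_out):
    """Solve the divider formula v_out = V * R_fixed / (R_fixed + R_flex) for R_flex."""
    if v_out <= 0:
        raise ValueError("v_out must be positive to solve for the flex resistance")
    return R_FIXED * (V_SUPPLY / v_out - 1.0)


def bend_angle(r_flex, max_angle=90.0):
    """Linearly map resistance to an angle, clamped to [0, max_angle] (assumed mapping)."""
    fraction = (r_flex - R_FLAT) / (R_BENT - R_FLAT)
    return max(0.0, min(max_angle, fraction * max_angle))


if __name__ == "__main__":
    for count in (350, 450, 560):             # hypothetical ADC readings
        v_out = adc_to_voltage(count)
        r_flex = flex_resistance(v_out)
        print(f"count {count}: {v_out:.3f} V, {r_flex:.0f} ohm, "
              f"{bend_angle(r_flex):.1f} deg")
```

Clamping the angle keeps a noisy reading outside the measured resistance range from producing a negative or oversized angle, which may help with the spikes seen on the breadboard.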

Due to transportation issues, we were unable to meet this week. Etin-osa spent this week working on integrating the machine learning model into the Arduino Uno for testing and learning. The plan was to train a system to predict the letters A, B, and C using the voltage values of a potentiometer in a voltage divider circuit. However, since the Uno was not built for machine learning, the work could not be taken any further.

This week was also spent developing part of the tail end of the program. It involves 3 buttons that act as inputs; their values are passed to the OLED, which starts a timer, and are sent to the EMIC2 if no further activity is detected.

September 11, 2020 (Week 3)

Most parts required for functionality have been received; the flex sensors have been shipped and are awaiting arrival. Joseph researched and installed Autodesk Eagle to create a circuit diagram that is easily legible and well labeled, as the library for the web-based circuito.io was too limited to create a useful schematic. Learning to utilize custom libraries for Eagle was required to import the Sparkfun Library Github, Adafruit Library Github and DIY Modules Library into the project. The Emic2 module was the only component not found. After directly contacting the manufacturer, Parallax Inc, Joseph was informed that no Eagle libraries were available but that the Emic2 uses a standard 0.1" pin header, which Eagle has available. The figure below shows the schematic created using Eagle.

Next, Joseph worked on finalizing the Serial Monitor output script to confirm IMU data functionality. This entailed learning the Serial.print() functions and the LSM9DS1 library. The script was a success, with the serial monitor neatly showing accelerometer and gyroscope output data. The script begins by including the LSM9DS1 library required to read sensor data and, after serial initialization, enters a loop that halts indefinitely with an error if the IMU is not found.

The first items printed on the Serial Monitor are the sample rates for the accelerometer and gyroscope in Hertz. These are not included in the void loop as they are static and only need to be seen once.

Lastly, the accelerometer and gyroscope data are printed on the serial monitor every second. This can be changed by adjusting the delay value (1000 ms = 1 second). At first an attempt was made to print the output data as a string, but this was found to take up more lines of code and more memory.

The final output is easy to read and understand.

The fingerspelling flowchart that was created last week was edited based on the feedback and recommendations received. Instead of a "custom gesture", a timer was implemented into the flowchart as a more practical solution.

The steps below explain the process of the software flowchart in detail.

LED is Orange

- The system starts when the power button is turned on.

LED is Green

- The IMU, I2C, and ADC are initialized during the first 2-3 seconds.

- The system then begins to collect input data (flex and IMU readings from the glove) for pre-processing. This is placed in a continuous loop that prevents the device from stalling; the loop (timer) resets every 0.2 seconds. When the user is not actively making a sign or gesture, their hand is in a "relaxed" state that does not count towards predictions. This lets the system know that no prediction is being made. When the user leaves that "relaxed" state, the system recognizes that a prediction is going to be made.

LED is Red

- Whenever a "recognizable" gesture is made within those 0.2 seconds of the timer, that data is passed on and pre-processed so that the model can accurately make predictions. This also activates a watchdog timer that interrupts input data collection while the prediction is made, so that no other gestures are captured during the brief prediction process (this also avoids potential errors). The predicted letter is then placed in a buffer that is held for a certain amount of time, set by a 0.5-second timer.

- While the timer is waiting for more letters, the LED is green for that brief time. If another gesture is predicted, the timer resets and the LED turns red again until the new letter reaches the buffer and is appended to the previous letter.

LED is Yellow

- If no other gesture passes within 0.5 seconds, the timer completes and the string in the buffer is sent to the EMIC2, where speech conversion comes into play and the word is spoken through the speaker.
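The buffer-and-timer behavior in the steps above can be sketched as a small class. This is a host-side illustration rather than the glove firmware: the class and method names are hypothetical, timestamps are passed in explicitly (on the Arduino they would come from a clock such as millis()), and the LED colors and the EMIC2 call are left out.

```python
class LetterBuffer:
    """Collect predicted letters and release the word after a quiet period."""

    def __init__(self, timeout_s=0.5):
        self.timeout_s = timeout_s        # 0.5 s timer from the flowchart
        self.letters = []
        self.last_letter_time = None

    def add_letter(self, letter, now):
        """Append a predicted letter and restart the timer."""
        self.letters.append(letter)
        self.last_letter_time = now

    def poll(self, now):
        """Return the finished word once the timer has expired, otherwise None."""
        if not self.letters:
            return None
        if now - self.last_letter_time < self.timeout_s:
            return None                   # still waiting for more letters
        word = "".join(self.letters)
        self.letters = []                 # clear the buffer for the next word
        self.last_letter_time = None
        return word


if __name__ == "__main__":
    buffer = LetterBuffer()
    # Hypothetical (time in seconds, predicted letter) events
    for timestamp, letter in [(0.0, "H"), (0.4, "I")]:
        buffer.add_letter(letter, timestamp)
    word = buffer.poll(1.0)               # 0.6 s after the last letter
    if word is not None:
        print("Send to text-to-speech:", word)
```

Passing the time in as an argument keeps the logic easy to test without waiting on a real clock.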

The flowchart for ASL signs was just about the same except for minor changes in wording. The whole process is fairly self-explanatory if you understand the process for ASL fingerspelling. The only difference is that there is no buffer and the speaker produces entire phrases at once.

An old Arduino Uno was found to still be on hand. The EMIC2 was also tested for functionality, trying different settings such as speed, language, and volume. The speaker may be faulty, given the problems encountered during testing.

Mock-up code is currently being written in which input data is passed in by a button and held in a buffer before being sent to the EMIC2 after a certain amount of time. Below is the code for everything so far. Work on the OLED was started as well.

September 4, 2020 (Week 2)

After reviewing the website with Dr. Ejaz, all recommended changes were made. All relevant information in the Design Testing tab was moved to the Progress Log. All testing, results and photos will be posted here. The Timeline was updated to reflect current term dates. Actual Progress is now displayed next to Proposed Progress, making it easy to track project progress. The format for weekly meetings was also changed and implemented.

The flex sensors were ordered by Joseph, while Etin-osa ordered the Emic2 text-to-speech module as well as the required speaker and LEDs. The Design Report template was created, reusing any relevant information from the Proposal Report such as project hardware, software, budget, Engineering Specifications and requirements.

Research was done on creating a circuit diagram. Utilities such as circuito.io, Proteus, Fritzing and Eagle were looked at for ease of use and for a large library of devices available for simulation and schematic creation. Circuito.io was the most user friendly but lacked a large enough library of devices to cover all components used. The image below shows all available components found using Circuito.io; missing components included the Emic2 text-to-speech module and the Witmotion Inertial Measurement Unit.

Because of this, a wiring diagram was created in Photoshop, and more time will be spent this following week researching how to create a schematic in KiCad or an equivalent in Circuito.io. The wiring diagram created is shown below.

On the software side, a flowchart was made to show the entire process of the glove during operation. Block colors indicate the color of the LED during different phases of the process. Because the user is deaf, visual indicators such as this are helpful in letting them know which part of the text-to-speech conversion cycle the glove is in.

On the machine learning side, other TensorFlow models were examined to build familiarity. The Keras sequential model is a basic model for the API; however, it seemed to work the best and produced quite good results. The images below show the code and the results, presented as a confusion matrix that gives a visual representation of the performance of the algorithm.
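For readers unfamiliar with how a confusion matrix is built, here is a minimal pure-Python sketch. It is not the code from the images: the labels and predictions are made up for illustration, and the function names are hypothetical. Rows are the true classes and columns are the predicted classes, so correct predictions land on the diagonal.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Count how often each true class (row) was predicted as each class (column)."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for truth, guess in zip(true_labels, predicted_labels):
        matrix[index[truth]][index[guess]] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


if __name__ == "__main__":
    classes = ["A", "B", "C"]
    # Made-up labels purely for illustration
    truth = ["A", "A", "B", "B", "C", "C"]
    guesses = ["A", "B", "B", "B", "C", "A"]
    matrix = confusion_matrix(truth, guesses, classes)
    for name, row in zip(classes, matrix):
        print(name, row)
    print(f"accuracy: {accuracy(matrix):.2f}")
```

Off-diagonal counts show which classes the model confuses with each other, which is the main reason to look at the matrix rather than a single accuracy number.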

August 28, 2020 (Week 1)

This week Etin-osa ordered the OLED display and gloves needed for the project. More time was spent working on the script reading LSM9DS1 IMU data. The Time & Effort, Engineering Specifications and Weekly Meetings portions of the website were all updated for Senior Design.

Further understanding of machine learning was also gained through a site called "Deeplizard" that contains a playlist discussing the fundamentals of machine learning. This was watched to solidify understanding of the concepts. The link to the video playlist is listed below.

Link: https://deeplizard.com/

August 21, 2020 (Summer Break)

This week, Joseph spent time creating a basic script to read accelerometer and gyroscope data from the internal LSM9DS1 through the serial monitor. Like last week, lots of time was spent researching the data sheets and IMU library to confirm functionality. Although the script was successful, its output looked unorganized and needs adjustment for readability. The figures below show axis orientation in relation to the IMU and output serial data. The right-aligned row shows current accelerometer x, y, z acceleration data whereas the left-aligned row reflects current gyroscope x, y, z angular rotation data.

Etin-osa spent this week looking into sites and videos that explained how to convert TensorFlow models written in Python into C++ so that they can be read and run by the Arduino Nano 33 BLE Sense. The videos below helped deepen that understanding; the following link helped as well.

Link: https://www.tensorflow.org/lite/microcontrollers

The models learned the previous week were put into practice. Parameters were adjusted to examine which methods worked best, although that could change once actual values are taken from the microcontroller and the built-in IMU.

August 14, 2020 (Summer Break)

This week, Joseph ordered the Arduino Nano 33 BLE Sense. The Progress Log in the Senior Design portion of the website was also updated. Initial research on capturing gesture data with the Nano 33 BLE's internal LSM9DS1 IMU was completed.

Etin-osa spent time learning more about machine learning and gaining a better understanding of hidden layers and classification. The video listed below covered the architecture of neural networks, including layers, weights, biases, activation functions, etc.

Deep learning was also briefly studied; different models, such as the sequential model, were explored through the Keras API integrated into TensorFlow. Alternate methods of data processing, artificial neural networks, validation sets, neural network predictions and the confusion matrix, and saving and loading models were also covered.